Classification: Evaluation Metrics

Basics

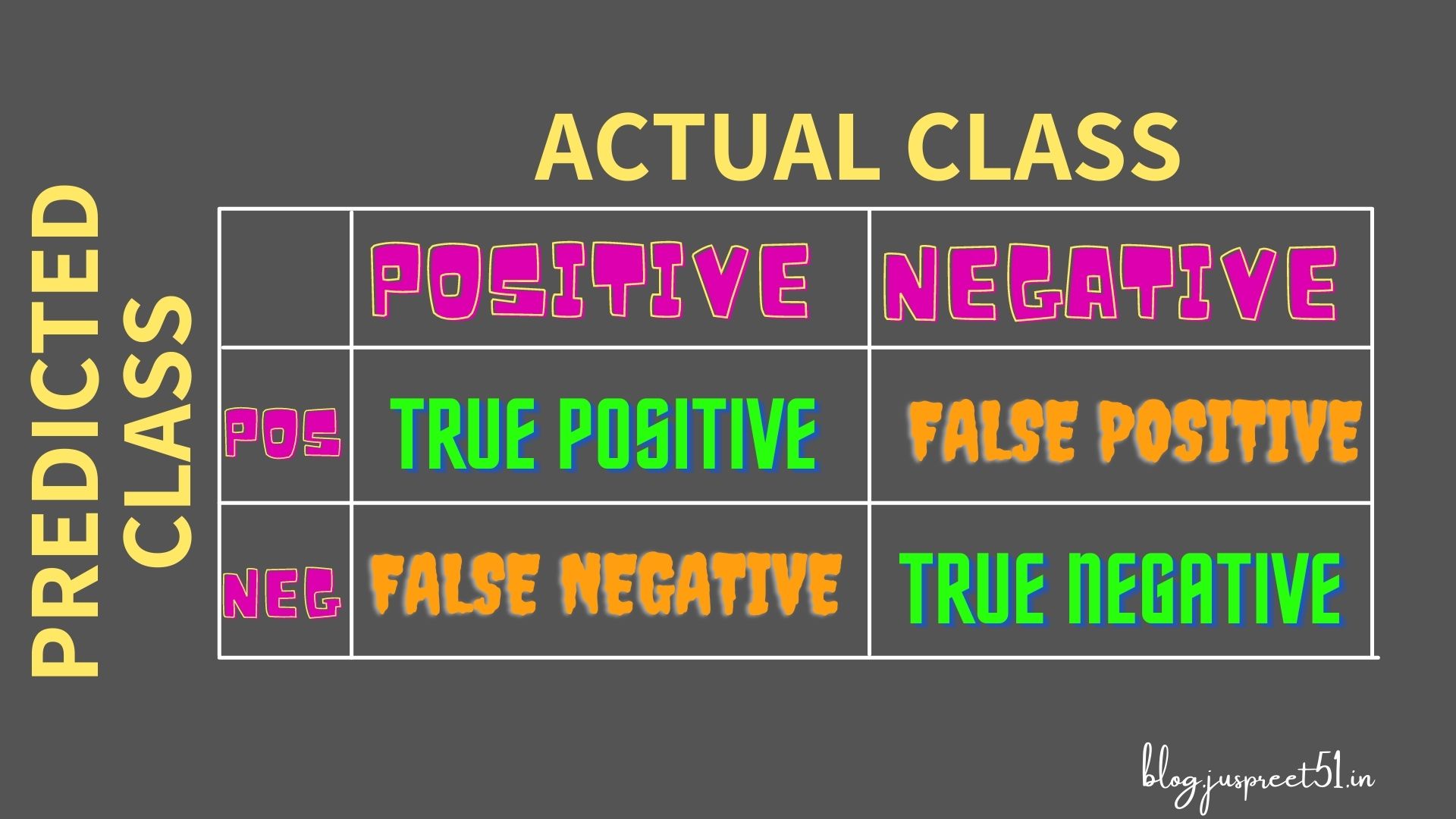

Confusion Matrix:

Type-I & Type-II Errors:
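A minimal Python sketch of how the four cells of a binary confusion matrix are counted, and where the two error types fall: a Type-I error is a false positive (a healthy patient flagged as sick) and a Type-II error is a false negative (a sick patient missed). The helper `confusion_counts`, the 1 = positive / 0 = negative label encoding, and the toy `y_true`/`y_pred` lists are illustrative assumptions, not part of any library.

```python
# Hypothetical helper: count the four confusion-matrix cells for binary labels.
# Assumption: 1 = positive class (e.g. "sick"), 0 = negative class ("healthy").

def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary 0/1 labels."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            tp += 1  # correctly flagged as positive
        elif predicted == 1 and actual == 0:
            fp += 1  # false alarm: Type-I error
        elif predicted == 0 and actual == 1:
            fn += 1  # missed positive: Type-II error
        else:
            tn += 1  # correctly flagged as negative
    return tp, fp, fn, tn


# Made-up illustration data
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(f"TP={tp}  FN={fn}   (actual positive row)")
print(f"FP={fp}  TN={tn}   (actual negative row)")
print(f"Type-I errors (FP): {fp}, Type-II errors (FN): {fn}")
```

For real work, scikit-learn's `sklearn.metrics.confusion_matrix(y_true, y_pred)` returns the same counts, laid out as `[[TN, FP], [FN, TP]]` for labels 0 and 1.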

Evaluation Metrics

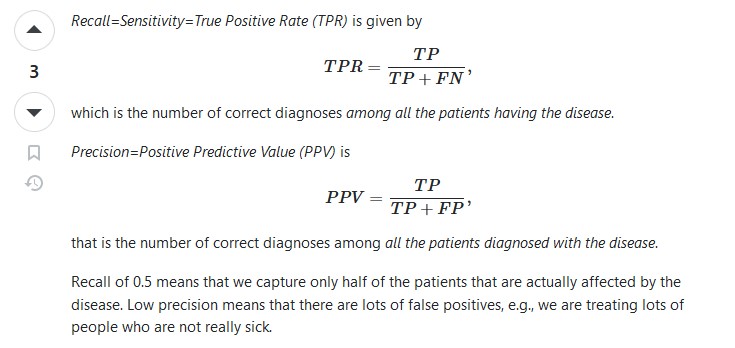

Precision & Recall:

Explanation 1:

Precision is about: out of all the patients diagnosed as sick, what proportion are actually sick.

And Recall is about: out of all the patients who are actually sick, how many were we able to diagnose as sick.
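Written in terms of the confusion-matrix counts (TP = true positives, FP = false positives, FN = false negatives), the two definitions above correspond to the standard formulas:

```latex
\text{Precision} = \frac{TP}{TP + FP}
\qquad
\text{Recall} = \frac{TP}{TP + FN}
```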

Explanation 2:

Fish in a Pond analogy (credit: reddit.com/u/question_23)

Imagine you're fishing and you have been told there are a total of 100 fish in the pond.

Out of those 100 fish, you manage to catch 80, so your Recall is $\frac{80}{100} = 80\%$.

But you also get 80 rocks in your net. So the total number of things retrieved in your net is 80 fish + 80 rocks/junk = 160 things, so your Precision is $\frac{80}{160} = 50\%$.

**Note:** Both Precision and Recall start from the same count, the number of fish retrieved (80); they differ only in what that count is divided by.

However, Precision is about: of all the things in the net, what proportion are fish (the thing we wanted to catch).

And Recall is about: what proportion of all the fish in the pond we were able to retrieve.
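The arithmetic of the fish analogy, as a small Python sketch. Fish caught are the true positives, rocks in the net are the false positives, and fish left in the pond are the false negatives; the variable names are illustrative only.

```python
# Numbers from the fish-in-a-pond analogy
fish_in_pond = 100   # every fish that exists: TP + FN
fish_caught = 80     # true positives (TP)
rocks_caught = 80    # false positives (FP): junk in the net

things_in_net = fish_caught + rocks_caught   # TP + FP = 160

recall = fish_caught / fish_in_pond          # 80 / 100
precision = fish_caught / things_in_net      # 80 / 160

print(f"Recall    = {recall:.0%}")     # Recall    = 80%
print(f"Precision = {precision:.0%}")  # Precision = 50%
```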

Precision & Recall Trade-off

You could use a smaller net and target one pocket of the pond where there are lots of fish and no rocks, but you might only catch 20 of the fish in order to get 0 rocks.

That is 20% recall ($\frac{20}{100}$) but 100% precision ($\frac{20}{20}$).

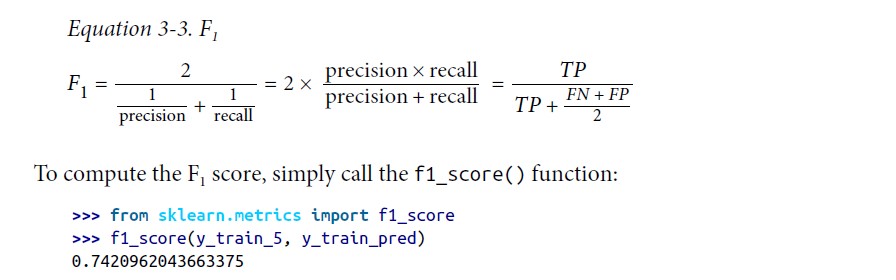
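The trade-off can be reproduced with a decision threshold: a strict threshold behaves like the small net (few, clean predictions), a loose one like the big net. This Python sketch sweeps three thresholds over made-up classifier scores; `precision_recall_at` is a hypothetical helper, and treating precision as 1.0 when nothing is predicted positive is an assumption of this sketch (libraries handle that edge case differently).

```python
# Sketch of the precision/recall trade-off via a decision threshold.
# Scores and labels are made-up illustration data (1 = positive).

def precision_recall_at(threshold, scores, labels):
    """Precision and recall when every score >= threshold is predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    # Assumed convention: no positive predictions -> precision 1.0
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for t in (0.9, 0.5, 0.1):
    p, r = precision_recall_at(t, scores, labels)
    print(f"threshold={t:.1f}  precision={p:.2f}  recall={r:.2f}")
```

With this toy data, lowering the threshold from 0.9 to 0.1 moves recall from 0.40 up to 1.00 while precision falls from 1.00 to 0.50.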

F1-Score:

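The F1 score is the harmonic mean of precision and recall:

$F_1 = \frac{2}{\frac{1}{\text{Precision}} + \frac{1}{\text{Recall}}} = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$

Whereas the regular (arithmetic) mean treats all values equally, the harmonic mean gives much more weight to low values. As a result, the classifier will only get a high F1 score if both recall and precision are high.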
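A small Python sketch comparing F1 with the arithmetic mean for the two nets in the fish analogy: the big net (precision 80/160 = 0.5, recall 80/100 = 0.8) and the small net (precision 1.0, recall 0.2). `f1_from_pr` is a hypothetical helper name; returning 0 when both inputs are 0 is a common convention, assumed here.

```python
# F1 (harmonic mean of precision and recall) vs. the plain arithmetic mean.

def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall; 0 when both are 0 (assumed convention)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


nets = {
    "big net (P=0.5, R=0.8)": (0.5, 0.8),
    "small net (P=1.0, R=0.2)": (1.0, 0.2),
}

for name, (p, r) in nets.items():
    print(f"{name}: arithmetic mean={(p + r) / 2:.3f}  F1={f1_from_pr(p, r):.3f}")
```

For the small net the arithmetic mean is 0.6, but F1 is only about 0.33, because the harmonic mean is pulled toward the low recall.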

ROC Curve & AUC

Sensitivity / True Positive Rate / Recall: Sensitivity tells us what proportion of the positive class got correctly classified, i.e. $\frac{TP}{TP + FN}$.

Sensitivity is the ability of a test to correctly identify patients with the disease, e.g. the percentage of sick people who are correctly identified as having the disease.

If all 100 of 100 sick people in a crowd are identified, the test has 100% sensitivity. Low sensitivity means more Type-II errors (false negatives).

Specificity / True Negative Rate: Specificity tells us what proportion of the negative class got correctly classified, i.e. $\frac{TN}{TN + FP}$.

E.g. the percentage of healthy people who are correctly identified as not having the disease.

If all 100 of 100 healthy people in a crowd are identified as healthy, the test has 100% specificity. Low specificity means more Type-I errors (false positives).
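Sensitivity and specificity for a hypothetical disease test, as a Python sketch. The four counts (100 sick and 100 healthy people) are invented for illustration and are not from these notes.

```python
# Invented counts for a hypothetical screening of 100 sick + 100 healthy people
sick_flagged_sick = 90         # TP: sick people the test catches
sick_flagged_healthy = 10      # FN: sick people the test misses (Type-II errors)
healthy_flagged_healthy = 95   # TN: healthy people correctly cleared
healthy_flagged_sick = 5       # FP: healthy people wrongly flagged (Type-I errors)

# Sensitivity / TPR = TP / (TP + FN)
sensitivity = sick_flagged_sick / (sick_flagged_sick + sick_flagged_healthy)
# Specificity / TNR = TN / (TN + FP)
specificity = healthy_flagged_healthy / (healthy_flagged_healthy + healthy_flagged_sick)

print(f"Sensitivity (TPR) = {sensitivity:.0%}")
print(f"Specificity (TNR) = {specificity:.0%}")
```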

False Negative Rate: FNR tells us what proportion of the positive class got incorrectly classified by the classifier, i.e. $\frac{FN}{TP + FN} = 1 - TPR$.

False Positive Rate: FPR tells us what proportion of the negative class got incorrectly classified by the classifier, i.e. $\frac{FP}{FP + TN} = 1 - \text{Specificity}$.
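The four rates above are what the ROC curve is built from: it plots TPR against FPR as the decision threshold varies, and AUC is the area under that curve. The Python sketch below computes the rates from confusion-matrix counts, traces ROC points over made-up scores, and integrates them with the trapezoidal rule. The helpers `rates`, `roc_points` and `auc` are hypothetical names for this illustration.

```python
# Sketch: TPR/TNR/FNR/FPR from counts, ROC points by threshold sweep,
# and AUC via the trapezoidal rule. Scores/labels are made-up data.

def rates(tp, fp, fn, tn):
    """Return (TPR, TNR, FNR, FPR) from confusion-matrix counts."""
    tpr = tp / (tp + fn)  # sensitivity / recall
    tnr = tn / (tn + fp)  # specificity
    fnr = fn / (tp + fn)  # = 1 - TPR
    fpr = fp / (tn + fp)  # = 1 - TNR
    return tpr, tnr, fnr, fpr


def roc_points(scores, labels):
    """(FPR, TPR) pairs for each distinct threshold, strictest first."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a curve given as (x, y) points sorted by x (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

print(rates(tp=4, fp=2, fn=1, tn=3))  # (0.8, 0.6, 0.2, 0.4)
pts = roc_points(scores, labels)
print("ROC points (FPR, TPR):", pts)
print(f"AUC = {auc(pts):.2f}")
```

For this toy data the AUC is 0.84, which equals the fraction of (positive, negative) pairs in which the positive example has the higher score (21 of 25). For real work, scikit-learn provides `sklearn.metrics.roc_curve` and `sklearn.metrics.roc_auc_score`.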